DisplayPort vs. HDMI: Which Is Better For Gaming?

The best gaming monitors are packed with features, but one aspect that often gets overlooked is the inclusion of DisplayPort vs. HDMI. What are the differences between the two ports, and is using one for connecting to your system definitively better?

You might think it's a simple matter of hooking up whatever cable comes with your monitor to your PC and calling it a day, but there are differences that can often mean a loss of refresh rate, color quality, or both if you're not careful. Here's what you need to know about DisplayPort vs. HDMI connections.

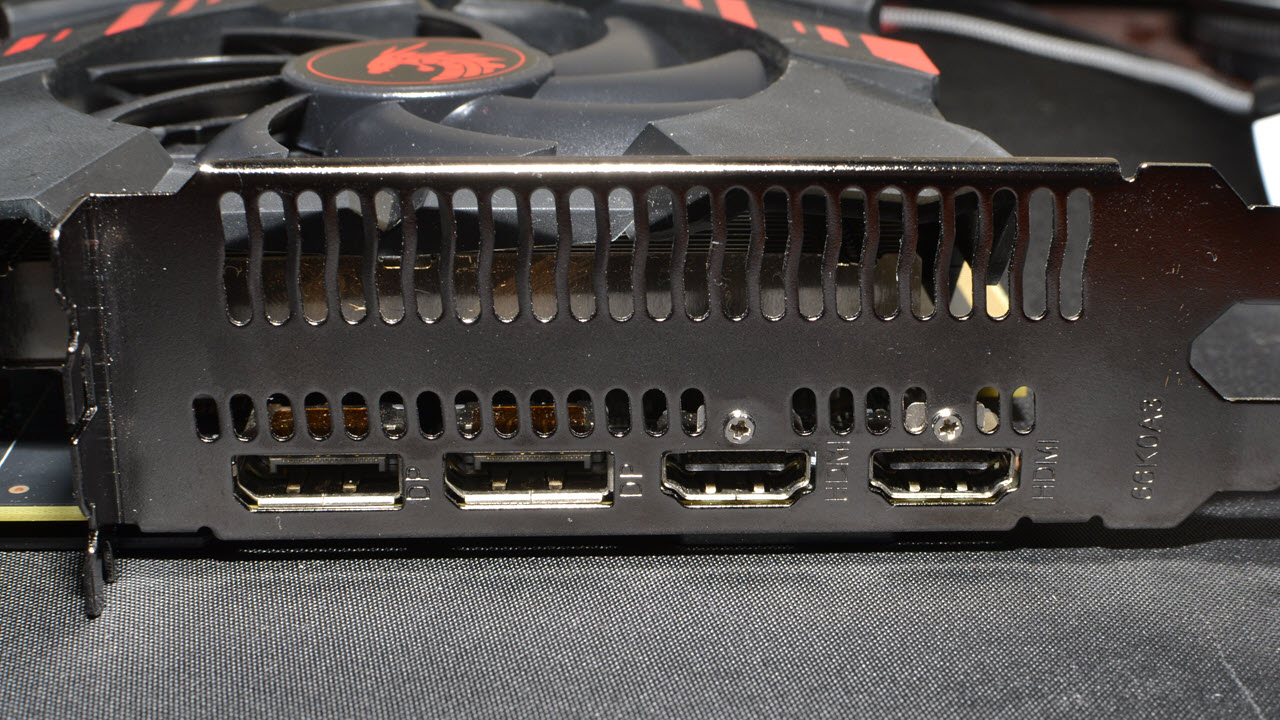

If you're looking to buy a new PC monitor or a new graphics card (you can find recommendations on our Best Graphics Cards page), you'll want to consider the capabilities of both sides of the connection, the video output of your graphics card and the video input on your display, before making any purchases. Our GPU Benchmarks hierarchy will tell you how the various graphics cards rank in terms of performance, but it doesn't dig into the connectivity options, which is something we'll cover here.

The Major Display Connection Types

The latest display connectivity standards are DisplayPort and HDMI (High-Definition Multimedia Interface). DisplayPort first appeared in 2006, while HDMI came out in 2002. Both are digital standards, meaning all the data about the pixels on your screen is represented as 0s and 1s as it zips across your cable, and it's up to the display to convert that digital information into an image on your screen.

Earlier monitors used DVI (Digital Visual Interface) connectors, and going back even further we had VGA (Video Graphics Array), along with component RGB, S-Video, composite video, EGA and CGA. You don't want to use VGA or any of those others in 2020, though. They're old, meaning any new GPU likely won't even support the connector, and even if it did, you'd be using an analog signal that's prone to interference. Yuck.

DVI is the bare minimum you want to use today, and even that has limitations. It has a lot in common with early HDMI, just without audio support. It works fine for gaming at 1080p, or at 1440p if you have a dual-link connection. Dual-link DVI-D basically doubles the bandwidth of single-link DVI-D via extra pins and wires, and most modern GPUs with a DVI port support dual-link.

If you're wondering about Thunderbolt 2/3, it actually just routes DisplayPort over the Thunderbolt connection. Thunderbolt 2 supports DisplayPort 1.2, and Thunderbolt 3 supports DisplayPort 1.4 video. It's also possible to route HDMI 2.0 over Thunderbolt 3 with the right hardware.

For newer displays it's best to go with DisplayPort or HDMI. But is there a clear winner between the two?

DisplayPort vs. HDMI: Specs and Resolutions

Not all DisplayPort and HDMI ports are created equal. The DisplayPort and HDMI standards are backward compatible, meaning you can plug in an HDTV from the mid-00s and it should still work with a brand new RTX 20-series or RX 5000-series graphics card. However, the connection between your display and graphics card will end up using the best option supported by both the sending and receiving ends of the connection. That might mean the best 4K gaming monitor with 144 Hz and HDR will end up running at 4K and 24 Hz on an older graphics card!

Here's a quick overview of the major DisplayPort and HDMI revisions, their maximum signal rates and the GPU families that first added support for the standard.

| Version | Max Transmission Rate | Max Data Rate | Resolution/Refresh Rate Support (24 bpp) | GPU Introduction |
|---|---|---|---|---|
| DisplayPort Versions | | | | |
| 1.0-1.1a | 10.8 Gbps | 8.64 Gbps | 1080p @ 144 Hz | AMD HD 3000 (R600) |
| | | | 4K @ 30 Hz | Nvidia GeForce 9 (Tesla) |
| 1.2-1.2a | 21.6 Gbps | 17.28 Gbps | 1080p @ 240 Hz | AMD HD 6000 (Northern Islands) |
| | | | 4K @ 75 Hz | Nvidia GK100 (Kepler) |
| | | | 5K @ 30 Hz | |
| 1.3 | 32.4 Gbps | 25.92 Gbps | 1080p @ 360 Hz | AMD RX 400 (Polaris) |
| | | | 4K @ 120 Hz | Nvidia GM100 (Maxwell 1) |
| | | | 5K @ 60 Hz | |
| | | | 8K @ 30 Hz | |
| 1.4-1.4a | 32.4 Gbps | 25.92 Gbps | 8K @ 120 Hz w/ DSC | AMD RX 400 (Polaris) |
| | | | | Nvidia GM200 (Maxwell 2) |
| 2.0 | 80.0 Gbps | 77.37 Gbps | 4K @ 240 Hz | Future GPUs |
| | | | 8K @ 85 Hz | |
| HDMI Versions | | | | |
| 1.0-1.2a | 4.95 Gbps | 3.96 Gbps | 1080p @ 60 Hz | AMD HD 2000 (R600) |
| | | | | Nvidia GeForce 9 (Tesla) |
| 1.3-1.4b | 10.2 Gbps | 8.16 Gbps | 1080p @ 144 Hz | AMD HD 5000 |
| | | | 1440p @ 75 Hz | Nvidia GK100 (Kepler) |
| | | | 4K @ 30 Hz | |
| | | | 4K 4:2:0 @ 60 Hz | |
| 2.0-2.0b | 18.0 Gbps | 14.4 Gbps | 1080p @ 240 Hz | AMD RX 400 (Polaris) |
| | | | 4K @ 60 Hz | Nvidia GM200 (Maxwell 2) |
| | | | 8K 4:2:0 @ 30 Hz | |
| 2.1 | 48.0 Gbps | 42.6 Gbps | 4K @ 144 Hz (240 Hz w/DSC) | Partial 2.1 VRR on Nvidia Turing |
| | | | 8K @ 30 Hz (120 Hz w/DSC) | |

Note that there are two bandwidth columns: transmission rate and data rate. The DisplayPort and HDMI digital signals use bitrate encoding of some form: 8b/10b for most of the older standards, 16b/18b for HDMI 2.1, and 128b/132b for DisplayPort 2.0. 8b/10b encoding, for example, means that for every 8 bits of data, 10 bits are actually transmitted, with the extra bits used to help maintain signal integrity (e.g., by ensuring zero DC bias).

That means only 80% of the theoretical bandwidth is actually available for data use with 8b/10b. 16b/18b encoding improves that to 88.9% efficiency, while 128b/132b encoding yields 97% efficiency. There are still other considerations, like the auxiliary channel on HDMI, but that's not a major factor.
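To make the relationship between the two bandwidth columns concrete, here is a minimal Python sketch of the encoding math. The function and dictionary names are our own for illustration, and the sketch models only the line-code overhead; published data rates for the newer standards can come out slightly lower than this simple ratio suggests.

```python
# Payload bits divided by transmitted bits for each line code named in the text.
ENCODING_EFFICIENCY = {
    "8b/10b": 8 / 10,
    "16b/18b": 16 / 18,
    "128b/132b": 128 / 132,
}


def data_rate_gbps(transmission_gbps, encoding):
    """Usable data rate after removing line-code overhead (and nothing else)."""
    return transmission_gbps * ENCODING_EFFICIENCY[encoding]


def efficiency_percent(encoding):
    """Share of the raw transmission rate that carries actual data."""
    return ENCODING_EFFICIENCY[encoding] * 100


if __name__ == "__main__":
    # DisplayPort 1.0-1.1a, 1.2 and 1.3/1.4 all use 8b/10b.
    for raw in (10.8, 21.6, 32.4):
        print(f"{raw} Gbps raw -> {data_rate_gbps(raw, '8b/10b'):.2f} Gbps data")
    for name in ENCODING_EFFICIENCY:
        print(f"{name}: {efficiency_percent(name):.1f}% efficient")
```

Running it reproduces the 8.64, 17.28 and 25.92 Gbps figures for the 8b/10b DisplayPort versions in the table above.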

Let's Talk More About Bandwidth

To understand the above chart in context, we need to go deeper. What all digital connections (DisplayPort, HDMI and even DVI-D) end up coming down to is the required bandwidth. Every pixel on your display has three components: red, green and blue (RGB). Alternatively, luma, blue chroma difference and red chroma difference (YCbCr/YPbPr) can be used. Whatever your GPU renders internally (typically 16-bit floating point RGBA, where A is the alpha/transparency information), that data gets converted into a signal for your display.

The standard in the past has been 24-bit color, or 8 bits each for the red, green and blue color components. HDR and high color depth displays have bumped that to 10-bit color, with 12-bit and 16-bit options as well, though the latter two are mostly in the professional space right now. Generally speaking, display signals use either 24 bits per pixel (bpp) or 30 bpp, with the best HDR monitors opting for 30 bpp. Multiply the color depth by the number of pixels and the screen refresh rate and you get the minimum required bandwidth. We say 'minimum' because there are a bunch of other factors as well.

Display timings are relatively complex calculations. The VESA governing body defines the standards, and there's even a handy spreadsheet that spits out the actual timings for a given resolution. A 1920x1080 monitor at a 60 Hz refresh rate, for example, uses 2,000 pixels per horizontal line and 1,111 lines once all the timing stuff is added. That's because display blanking intervals need to be factored in. (These blanking intervals are partly a holdover from the analog CRT screen days, but the standards still include them even with digital displays.)
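As a rough illustration of why blanking matters, the sketch below multiplies out the 1080p 60 Hz example using the total timings quoted above (2,000 pixels per line, 1,111 lines). The function name is hypothetical, and real timings for other modes would have to come from the VESA spreadsheet rather than from this code.

```python
def required_bandwidth_gbps(h_total, v_total, refresh_hz, bits_per_pixel):
    """Data bandwidth in Gbps for a mode, given total (active + blanking) timings."""
    return h_total * v_total * refresh_hz * bits_per_pixel / 1e9


if __name__ == "__main__":
    # Naive figure: visible pixels only, no blanking.
    naive = required_bandwidth_gbps(1920, 1080, 60, 24)
    # Figure with blanking intervals included, using the totals from the text.
    with_blanking_8bit = required_bandwidth_gbps(2000, 1111, 60, 24)
    with_blanking_10bit = required_bandwidth_gbps(2000, 1111, 60, 30)
    print(f"visible pixels only: {naive:.2f} Gbps")
    print(f"with blanking, 24 bpp: {with_blanking_8bit:.2f} Gbps")
    print(f"with blanking, 30 bpp: {with_blanking_10bit:.2f} Gbps")
```

The visible-pixels-only figure comes to about 2.99 Gbps, while adding the blanking intervals raises it to roughly 3.20 Gbps at 24 bpp and 4.00 Gbps at 30 bpp.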

Using the VESA spreadsheet and running the calculations gives the following bandwidth requirements. Compare the following table with the first table; if the required data bandwidth is less than the max data rate that a standard supports, then the resolution can be used.

| Resolution | Color Depth | Refresh Rate (Hz) | Required Data Bandwidth |
|---|---|---|---|
| 1920 x 1080 | 8-bit | 60 | 3.20 Gbps |
| 1920 x 1080 | 10-bit | 60 | 4.00 Gbps |
| 1920 x 1080 | 8-bit | 144 | 8.00 Gbps |
| 1920 x 1080 | 10-bit | 144 | 10.00 Gbps |
| 2560 x 1440 | 8-bit | 60 | 5.63 Gbps |
| 2560 x 1440 | 10-bit | 60 | 7.04 Gbps |
| 2560 x 1440 | 8-bit | 144 | 14.08 Gbps |
| 2560 x 1440 | 10-bit | 144 | 17.60 Gbps |
| 3840 x 2160 | 8-bit | 60 | 12.54 Gbps |
| 3840 x 2160 | 10-bit | 60 | 15.68 Gbps |
| 3840 x 2160 | 8-bit | 144 | 31.35 Gbps |
| 3840 x 2160 | 10-bit | 144 | 39.19 Gbps |
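The comparison between the two tables can be sketched in a few lines of Python. The dictionary below simply copies the max data rates from the first table, and the function name is our own; it checks uncompressed signals only and ignores DSC and chroma subsampling.

```python
# Max data rates in Gbps, copied from the first table in this article.
MAX_DATA_RATE_GBPS = {
    "DisplayPort 1.0-1.1a": 8.64,
    "DisplayPort 1.2-1.2a": 17.28,
    "DisplayPort 1.3-1.4a": 25.92,
    "DisplayPort 2.0": 77.37,
    "HDMI 1.0-1.2a": 3.96,
    "HDMI 1.3-1.4b": 8.16,
    "HDMI 2.0-2.0b": 14.4,
    "HDMI 2.1": 42.6,
}


def standards_supporting(required_gbps):
    """Return the standards whose max data rate exceeds the required bandwidth."""
    return [
        name
        for name, limit in MAX_DATA_RATE_GBPS.items()
        if required_gbps < limit
    ]


if __name__ == "__main__":
    # 2560 x 1440, 8-bit, 144 Hz needs 14.08 Gbps per the table above.
    print(standards_supporting(14.08))
    # 3840 x 2160, 10-bit, 144 Hz needs 39.19 Gbps.
    print(standards_supporting(39.19))
```

For example, 1440p at 144 Hz with 8-bit color clears DisplayPort 1.2 and HDMI 2.0 or newer, while uncompressed 4K at 144 Hz with 10-bit color only fits within HDMI 2.1 and DisplayPort 2.0.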

The above figures are all uncompressed signals, however. DisplayPort 1.4 added the option of Display Stream Compression 1.2a (DSC), which is also part of HDMI 2.1. In short, DSC helps overcome bandwidth limitations, which are becoming increasingly problematic as resolutions and refresh rates increase. For example, basic 24 bpp at 8K and 60 Hz needs 49.65 Gbps of data bandwidth, or 62.06 Gbps for 30 bpp HDR color. 8K 120 Hz 30 bpp HDR, a resolution that we're likely to see more of in the future, needs 127.75 Gbps. Yikes!

DSC can provide up to a 3:1 compression ratio by converting to 4:2:2 or 4:2:0 YCgCo and using delta PCM encoding. It provides a "visually lossless" (or nearly so, depending on what you're viewing) result, particularly for video (i.e., movie) signals. Using DSC, 8K 120 Hz HDR is suddenly viable, with a bandwidth requirement of 'only' 42.58 Gbps.
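The DSC arithmetic is simple enough to sketch. The snippet below assumes the best-case 3:1 ratio mentioned above (actual ratios depend on configuration), and the function name is hypothetical.

```python
def with_dsc(uncompressed_gbps, ratio=3.0):
    """Bandwidth needed after DSC, assuming a fixed compression ratio."""
    return uncompressed_gbps / ratio


if __name__ == "__main__":
    # 8K 120 Hz HDR needs 127.75 Gbps uncompressed per the text.
    print(f"8K 120 Hz HDR with DSC: {with_dsc(127.75):.2f} Gbps")
    # 8K 60 Hz HDR needs 62.06 Gbps uncompressed.
    print(f"8K 60 Hz HDR with DSC: {with_dsc(62.06):.2f} Gbps")
```

Under that assumption, 8K 120 Hz HDR drops to about 42.58 Gbps, just under HDMI 2.1's 42.6 Gbps data rate, and 8K 60 Hz HDR drops to about 20.69 Gbps, which fits within DisplayPort 1.4's 25.92 Gbps.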

Both HDMI and DisplayPort can also carry audio data, which requires bandwidth as well, though it's a minuscule amount compared to the video data. DisplayPort and HDMI currently use a maximum of 36.86 Mbps for audio, or 0.037 Gbps if we keep things in the same units as video. Earlier versions of each standard can use even less data for audio.

That's a lengthy introduction to a complex subject, but if you've ever wondered why the simple math (resolution * refresh rate * color depth) doesn't match published specs, it's because of all the timing standards, encoding, audio and more. Bandwidth isn't the only factor, but in general, the standard with a higher maximum bandwidth is 'better.'

DisplayPort: The PC Choice

Currently DisplayPort 1.4 is the most capable and readily available version of the DisplayPort standard. The DisplayPort 2.0 spec came out in June 2019, but there still aren't any graphics cards or displays using the new version. We thought that would change with the launch of AMD's 'Big Navi' (aka Navi 2x, aka RDNA 2) and Nvidia's Ampere GPUs, but both stick with DisplayPort 1.4a. DisplayPort 1.4 doesn't have as much bandwidth available as HDMI 2.1, but it's sufficient for up to 8K 60Hz with DSC, and HDMI 2.1 hardware isn't really available for PCs yet.

One advantage of DisplayPort is that variable refresh rates (VRR) have been part of the standard since DisplayPort 1.2a. We also like the robust DisplayPort (but not mini-DisplayPort) connector, which has hooks that latch into place to keep cables secure. It's a small thing, but we've definitely pulled loose more than a few HDMI cables by accident. DisplayPort can also connect multiple screens to a single port via Multi-Stream Transport (MST), and the DisplayPort signal can be piped over a USB Type-C connector that also supports MST.

One area where there has been some confusion is in regards to licensing and royalties. DisplayPort was supposed to be a less expensive standard (at least, that's how I remember it being proposed back in the day). But today, both HDMI and DisplayPort have various associated brands, trademarks, and patents that have to be licensed. With various associated technologies like HDCP (High-bandwidth Digital Content Protection), DSC, and more, companies have to pay a royalty for DP just like HDMI. The current rate appears to be $0.20 per product with a DisplayPort interface, with a cap of $7 million per year. HDMI charges $0.15 per product, or $0.05 if the HDMI logo is used in promotional materials.

Because the standard has evolved over the years, not all DisplayPort cables will work properly at the latest speeds. The original DisplayPort 1.0-1.1a spec allowed for RBR (reduced bit rate) and HBR (high bit rate) cables, capable of 5.18 Gbps and 8.64 Gbps of data bandwidth, respectively. DisplayPort 1.2 introduced HBR2, which doubled the maximum data bit rate to 17.28 Gbps and is compatible with standard HBR DisplayPort cables. HBR3 with DisplayPort 1.3-1.4a increased things again to 25.92 Gbps, and added the requirement of DP8K DisplayPort certified cables.

Finally, with DisplayPort 2.0 there are three new transmission modes: UHBR 10 (ultra high bit rate), UHBR 13.5 and UHBR 20. The number refers to the bandwidth of each lane, and DisplayPort uses four lanes, so UHBR 10 offers up to 40 Gbps of transmission rate, UHBR 13.5 can do 54 Gbps and UHBR 20 peaks at 80 Gbps. All three UHBR standards are compatible with the same DP8K-certified cables, thankfully, and use 128b/132b encoding, meaning data bit rates of 38.69 Gbps, 52.22 Gbps, and 77.37 Gbps.
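The per-lane arithmetic for the UHBR modes can be sketched as follows. The names are our own, the quoted data rates are copied from the paragraph above, and the "implied efficiency" is just the ratio of those two numbers rather than anything taken from the spec.

```python
LANES = 4  # DisplayPort uses four lanes

# Per-lane transmission rate in Gbps for each DisplayPort 2.0 mode.
UHBR_PER_LANE_GBPS = {"UHBR 10": 10.0, "UHBR 13.5": 13.5, "UHBR 20": 20.0}

# Data rates in Gbps as quoted in the text above.
QUOTED_DATA_RATE_GBPS = {"UHBR 10": 38.69, "UHBR 13.5": 52.22, "UHBR 20": 77.37}


def transmission_rate_gbps(mode):
    """Total raw link rate across all four lanes."""
    return LANES * UHBR_PER_LANE_GBPS[mode]


def implied_efficiency_percent(mode):
    """Quoted data rate as a percentage of the raw link rate."""
    return QUOTED_DATA_RATE_GBPS[mode] / transmission_rate_gbps(mode) * 100


if __name__ == "__main__":
    for mode in UHBR_PER_LANE_GBPS:
        raw = transmission_rate_gbps(mode)
        eff = implied_efficiency_percent(mode)
        print(f"{mode}: {raw:.0f} Gbps raw, {eff:.1f}% usable")
```

All three modes work out to roughly 96.7% usable bandwidth, in line with the approximately 97% efficiency quoted for 128b/132b encoding.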

Officially, the maximum length of a DisplayPort cable is up to 3m (9.8 feet), which is one of the potential drawbacks, particularly for consumer electronics use.

With a maximum data rate of 25.92 Gbps, DisplayPort 1.4 can handle 4K resolution 24-bit color at 98 Hz, and dropping to 4:2:2 YCbCr gets it to 144 Hz with HDR. Keep in mind that 4K HDR monitors running at 144 Hz still cost a premium, so gamers will more likely be looking at something like a 144Hz display at 1440p. That only requires 14.08 Gbps for 24-bit color or 17.60 Gbps for 30-bit HDR, which DP 1.4 can easily handle.

If you're wondering about 8K content in the future, the reality is that even though it's achievable right now via DSC and DisplayPort 1.4a, the displays and PC hardware needed to drive such displays aren't generally within reach of consumer budgets. (GeForce RTX 3090 may change that, but it seems as though HDMI 2.1 will be the way to go there.) By the time 8K becomes a viable resolution, we'll have gone through a couple more generations of GPUs.

HDMI: Ubiquitous Consumer Electronics

Updates to HDMI have kept the standard relevant for over 16 years. The earliest versions of HDMI have become outdated, but later versions have increased bandwidth and features.

HDMI 2.0b and earlier are 'worse' in some ways compared to DisplayPort 1.4, but if you're not trying to run at extremely high resolutions or refresh rates, you probably won't notice the difference. Full 24-bit RGB color at 4K 60 Hz has been available since HDMI 2.0 released in 2013, and higher resolutions and/or refresh rates are possible with 4:2:0 YCbCr output, though you generally don't want to use that with PC text, as it can make the edges look fuzzy.

For AMD FreeSync users, HDMI has also supported VRR via an AMD extension since 2.0b, but HDMI 2.1 is where VRR became part of the official standard. So far, only Nvidia has support for HDMI 2.1 VRR on its Turing and upcoming Ampere GPUs, which is used on LG's 2019 OLED TVs. That will likely change once AMD's 'Big Navi' GPUs are released, and we expect full HDMI 2.1 support from Nvidia's Ampere GPUs as well. If you own a Turing or earlier generation Nvidia GPU, outside of specific scenarios like the LG TVs, you're generally better off using DisplayPort for the time being.

One major advantage of HDMI is that it's ubiquitous. Millions of devices with HDMI shipped in 2004 when the standard was young, and it's now found everywhere. These days, consumer electronics devices like TVs often include three or more HDMI ports. What's more, TVs and consumer electronics hardware have already started shipping with HDMI 2.1, even though no PC graphics cards support the full 2.1 spec yet. (The GeForce RTX 3070 and above have at least one HDMI 2.1 port.)

HDMI cable requirements have changed over time, just like DisplayPort. One of the big advantages is that high quality HDMI cables can be up to 15m (49.2 feet) in length, five times longer than DisplayPort. That may not be important for a display sitting on your desk, but it can definitely matter for home theater use. Originally, HDMI had two categories of cables: category 1 or standard HDMI cables are intended for lower resolutions and/or shorter runs, and category 2 or "High Speed" HDMI cables are capable of 1080p at 60 Hz and 4K at 30 Hz with lengths of up to 15m.

More recently, HDMI 2.0 introduced "Premium High Speed" cables certified to meet the 18 Gbps bit rate, and HDMI 2.1 has created a fourth class of cable, "Ultra High Speed" HDMI that can handle up to 48 Gbps. HDMI also provides for routing Ethernet signals over the HDMI cable, though this is rarely used in the PC space.

We mentioned licensing fees earlier, and while HDMI Technology doesn't explicitly state the cost, this website details the various HDMI licensing fees as of 2014. The short summary: for a high volume business making a lot of cables or devices, it's $10,000 annually, and $0.05 per HDMI port provided HDCP (High-bandwidth Digital Content Protection) is used and the HDMI logo is displayed in marketing material. In other words, the cost to end users is easily absorbed in most cases, unless some bean counter comes down with a case of extreme penny pinching.

Like DisplayPort, HDMI also supports HDCP to protect the content from being copied. That's a separate licensing fee, naturally (though it reduces the HDMI fee). HDMI has supported HDCP since the beginning, starting at HDCP 1.1 and reaching HDCP 2.2 with HDMI 2.0. HDCP can cause issues with longer cables, and ultimately it appears to annoy consumers more than the pirates. At present, known hacks / workarounds to strip HDCP 2.2 from video signals can be found.

DisplayPort vs. HDMI: The Bottom Line for Gamers

We've covered the technical details of DisplayPort and HDMI, but which one is actually better for gaming? Some of that will depend on the hardware you already own or intend to purchase. Both standards are capable of delivering a good gaming experience, but if you want a great gaming experience, right now DisplayPort 1.4 is generally better than HDMI 2.0, HDMI 2.1 technically beats DP 1.4, and DisplayPort 2.0 should trump HDMI 2.1. The problem is, you'll need to buy a TV rather than a monitor to get HDMI 2.1 right now, and we're not sure when DP 2.0 hardware will start shipping (RTX 40-series maybe).

For Nvidia gamers, your best option right now is a DisplayPort 1.4 connection to a G-Sync display. If you buy a new GeForce RTX 30-series card, however, HDMI 2.1 might be better (and it will probably be required if you want to connect your PC to a TV). Again, the only G-Sync Compatible displays out now with HDMI 2.1 are TVs. Unless you're planning on gaming on the big screen in the living room, you're better off with DisplayPort right now. Ampere supports HDMI 2.1 but sticks with DP 1.4, and G-Sync PC monitors are likely to continue prioritizing DisplayPort.

AMD gamers may have a few more options, as there are inexpensive FreeSync monitors with HDMI available. However, DisplayPort is still the preferred standard for PC monitors. It's easier to find a display that can do 144 Hz over DisplayPort with FreeSync, whereas a lot of HDMI FreeSync displays only work at lower resolutions or refresh rates. HDMI 2.1 meanwhile is only supported on the latest RX 6000-series GPUs, but DisplayPort 2.0 support apparently won't be coming for at least one more generation of GPUs.

What if you already have a monitor that isn't running at higher refresh rates or doesn't have G-Sync or FreeSync capability, and it has both HDMI and DisplayPort inputs? Assuming your graphics card also supports both connections (and it probably does if it's a card made in the past five years), in many instances the choice of connection won't really matter.

2560x1440 at a fixed 144 Hz refresh rate and 24-bit color works just fine on DisplayPort 1.2 or higher, as well as HDMI 2.0 or higher. Anything lower than that will also work without trouble on either connection type. About the only caveat is that sometimes HDMI connections on a monitor will default to a limited RGB range, but you can correct that in the AMD or Nvidia display options. (This is because old TV standards used a limited color range, and some modern displays still think that's a good idea. News flash: it's not.)

Other use cases might push you toward DisplayPort as well, like if you want to use MST to have multiple displays daisy chained from a single port. That's not a very common scenario, but DisplayPort does make it possible. Home theater use, on the other hand, continues to prefer HDMI, and the auxiliary channel can improve universal remote compatibility. If you're hooking up your PC to a TV, HDMI is usually required, as there aren't many TVs that have a DisplayPort input (BFGDs like the HP Omen X being one of the few, very expensive, exceptions).

Ultimately, while there are specs advantages to DisplayPort, and some features on HDMI that can make it a better choice for consumer electronics use, the two standards end up overlapping in many areas. The VESA standards group in charge of DisplayPort has its eyes on PC adoption growth, whereas HDMI is defined by a consumer electronics consortium and thinks about TVs first. But DisplayPort and HDMI end up with similar capabilities. You can do 4K at 60 Hz on both standards without DSC, so it's only 8K or 4K at refresh rates above 60 Hz where you actually run into limitations on recent GPUs.

Source: https://www.tomshardware.com/features/displayport-vs-hdmi-better-for-gaming
